Rule 110 in WebGPU

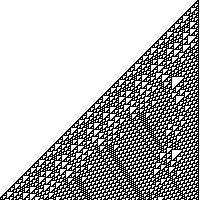

If you’re running the latest Google Chrome Canary, and you’ve enabled the “Unsafe WebGPU” experiment, then you should see two funny triangles below. The first is just an image; the second is calculated with WebGPU, a bleeding-edge, partially implemented web browser feature. Most of you reading this won’t have this feature enabled, so you’ll just see the image.

The image is Rule 110, a cellular automaton. It’s calculated using this GLSL:

    #version 450

    layout(std430, set = 0, binding = 0) buffer StateInMatrix { uint size; uint cells[]; } stateIn;
    layout(std430, set = 0, binding = 1) buffer StateOutMatrix { uint size; uint cells[]; } stateOut;

    // Read one cell. Out-of-range indexes count as 0; this includes x-1 at x = 0,
    // which wraps around to a huge uint.
    uint s(uint i) {
      return i < stateIn.size ? stateIn.cells[i] : 0;
    }

    void main() {
      uint x = gl_GlobalInvocationID.x;
      // Pack the left, centre and right cells into a 3-bit number.
      uint n = (s(x-1) << 2) | (s(x) << 1) | s(x+1);
      // Rule 110: these five neighbourhoods produce a live cell.
      uint newState = (n == 6 || n == 5 || n == 3 || n == 2 || n == 1) ? 1 : 0;
      stateOut.cells[gl_GlobalInvocationID.x] = newState;
    }

This GLSL runs one step of a simulated world, from stateIn to stateOut. The main function runs once for every cell in the world.
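To make the rule concrete, here is a minimal CPU sketch of the same step in JavaScript. It is not the code behind this page: `rule110Step` is a hypothetical name, and the loop stands in for the GPU running main once per cell. It also shows where the name comes from: the five neighbourhoods the shader tests are exactly the set bits of the number 110.

```javascript
// One Rule 110 step over an array of 0/1 cells (hypothetical helper).
function rule110Step(cells) {
  // Mirror the shader's s(): anything outside the world reads as 0.
  const s = (i) => (i >= 0 && i < cells.length ? cells[i] : 0);
  const next = new Uint32Array(cells.length);
  for (let x = 0; x < cells.length; x++) {
    // Pack left, centre and right into a 3-bit number, as in the GLSL.
    const n = (s(x - 1) << 2) | (s(x) << 1) | s(x + 1);
    // 110 is 0b01101110: bits 1, 2, 3, 5 and 6 are set,
    // the same five cases the shader compares against.
    next[x] = (110 >> n) & 1;
  }
  return next;
}
```

For example, `rule110Step(Uint32Array.of(0, 0, 0, 1))` gives the cells `0, 0, 1, 1`.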

At the time of writing, Google Chrome hasn’t implemented any way to draw GPU buffers to the screen. But it does let you extract a buffer as an ArrayBuffer. So instead, I use a 2d canvas context, and call ctx.putImageData with an ImageData wrapping that ArrayBuffer.
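The canvas side of that can be sketched as follows. This is an assumption about the shape of the code, not the original: `drawPixels` is a hypothetical helper, `ctx` is a 2d canvas context, and the buffer is assumed to hold one RGBA pixel per four bytes. The one detail worth knowing is that putImageData takes an ImageData object, so the raw bytes have to be wrapped first.

```javascript
// Sketch: draw a buffer of RGBA pixels onto a 2d canvas (hypothetical helper).
function drawPixels(ctx, arrayBuffer, width, height) {
  // View the raw bytes as clamped 8-bit channels: R, G, B, A per pixel.
  const bytes = new Uint8ClampedArray(arrayBuffer, 0, width * height * 4);
  // putImageData wants an ImageData, so wrap the bytes with their dimensions.
  const image = new ImageData(bytes, width, height);
  ctx.putImageData(image, 0, 0);
}
```

In a browser this would be called with something like `drawPixels(canvas.getContext("2d"), buffer, 400, 400)`, where the sizes match the canvas.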

I use some more GLSL to render the state to a pixel buffer, in a format that can be written to a canvas:

    #version 450

    layout(std430, set = 0, binding = 0) buffer StateMatrix { uint size; uint cells[]; } state;
    layout(std430, set = 0, binding = 1) buffer ScreenMatrix { uint pixels[]; } screen;

    uint rgba(uint r, uint g, uint b, uint a) {
      // Note "little-endian" order of uints: r is the lowest byte, so it comes first in memory.
      return a<<24 | b<<16 | g<<8 | r;
    }

    void main() {
      uint x = gl_GlobalInvocationID.x;
      // Dead cells are white, live cells are black; both fully opaque.
      screen.pixels[x] = state.cells[x] == 0 ? rgba(255,255,255,255) : rgba(0,0,0,255);
    }

For some reason, the simulation is really slow. It takes around a second to render this 400px canvas. Maybe I’m doing something wrong, or this WebGPU implementation is very inefficient. I’m sure it would be faster in vanilla JavaScript.
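For anyone who wants to test that guess, here is a rough vanilla JavaScript baseline that does both jobs on the CPU: stepping the world and packing pixels the same way as rgba. Treat it as a sketch under assumptions. `renderRule110` is a hypothetical name, the starting world (one live cell at the right edge) is my guess, and the packed values only come out as R, G, B, A bytes on a little-endian machine.

```javascript
// Vanilla-JS baseline: simulate and rasterise Rule 110 (hypothetical helper).
function renderRule110(width, height) {
  const WHITE = 0xffffffff; // rgba(255,255,255,255) as a<<24 | b<<16 | g<<8 | r
  const BLACK = 0xff000000; // rgba(0,0,0,255)
  const pixels = new Uint32Array(width * height);
  let cells = new Uint8Array(width);
  cells[width - 1] = 1; // assumed starting world: one live cell at the right edge
  for (let y = 0; y < height; y++) {
    // Write the current generation as one row of pixels.
    for (let x = 0; x < width; x++) {
      pixels[y * width + x] = cells[x] === 0 ? WHITE : BLACK;
    }
    // Advance one generation, reading out-of-range neighbours as 0.
    const next = new Uint8Array(width);
    for (let x = 0; x < width; x++) {
      const left = x > 0 ? cells[x - 1] : 0;
      const right = x + 1 < width ? cells[x + 1] : 0;
      const n = (left << 2) | (cells[x] << 1) | right;
      next[x] = n === 6 || n === 5 || n === 3 || n === 2 || n === 1 ? 1 : 0;
    }
    cells = next;
  }
  return pixels;
}
```

Wrapping `renderRule110(400, 400)` between two `performance.now()` calls gives a number to set against the one-second figure above.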

The WebGPU API is pretty low-level. I needed ~150 lines of JavaScript to run this simulation. I won’t explain it all here - not least because I don’t understand it all. I recommend reading “Get started with GPU Compute on the Web”, which is where I started.

This page copyright James Fisher 2020. Content is not associated with my employer.
