Using BodyPix segmentation in a WebGL shader

In the previous post I showed how to run BodyPix on a video stream, displaying the segmentation using the library’s convenience functions. But if you want to use the segmentation as part of your WebGL rendering pipeline, you need to access it from your shader. In this post, I demo a fragment shader that sets the alpha channel of a canvas based on a BodyPix segmentation. The demo shows your webcam feed in the bottom-right corner of this page, with alpha transparency taken from BodyPix.

A call to net.segmentPerson resolves to something like this:

```
{
  allPoses: [...],
  data: Uint8Array(307200) [...],
  height: 480,
  width: 640,
}
```

There is one byte for each pixel (note 640*480 == 307200): 1 if that pixel belongs to a person, 0 otherwise. The bytes are in row-major order, so pixel (x,y) is at index y*640 + x, where (0,0) is the top-left of the image.

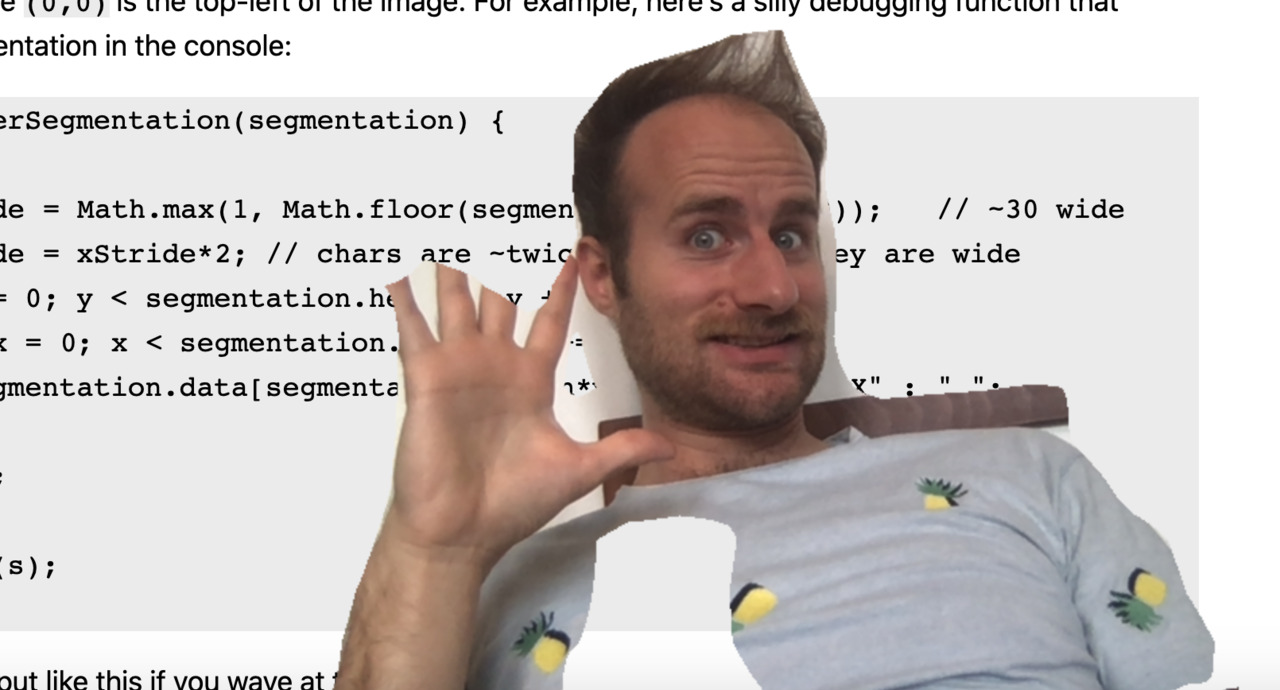
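To make the row-major indexing concrete, here is a minimal sketch of a per-pixel lookup. isPersonAt is a hypothetical helper, not part of BodyPix, and the tiny 4x3 segmentation is made-up data standing in for real output; it only assumes the width/height/data shape shown above.

```javascript
// Hypothetical helper (not a BodyPix API): is pixel (x, y) part of a person?
// Assumes a segmentation shaped like { width, height, data: Uint8Array }.
function isPersonAt(segmentation, x, y) {
  const { width, height, data } = segmentation;
  if (x < 0 || y < 0 || x >= width || y >= height) {
    throw new RangeError(`(${x}, ${y}) is outside ${width}x${height}`);
  }
  // Row-major: skip y whole rows of `width` bytes, then x bytes into the row.
  return data[y * width + x] === 1;
}

// Made-up 4x3 segmentation: only the pixel at (2, 1) is marked as "person".
const fake = {
  width: 4,
  height: 3,
  data: Uint8Array.from([
    0, 0, 0, 0,
    0, 0, 1, 0,
    0, 0, 0, 0,
  ]),
};

console.log(isPersonAt(fake, 2, 1)); // true  (index 1*4 + 2 = 6)
console.log(isPersonAt(fake, 1, 2)); // false (index 2*4 + 1 = 9)
```

For a real 640x480 result, the same call reads data[y*640 + x].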

For example, here’s a silly debugging function that renders the segmentation in the console:

```js
function renderSegmentation(segmentation) {
  let s = "";
  const xStride = Math.max(1, Math.floor(segmentation.width / 30)); // ~30 chars wide
  const yStride = xStride * 2; // chars are ~twice as tall as they are wide
  for (let y = 0; y < segmentation.height; y += yStride) {
    for (let x = 0; x < segmentation.width; x += xStride) {
      s += segmentation.data[segmentation.width * y + x] === 1 ? "X" : " ";
    }
    s += "\n";
  }
  console.log(s);
}
```

It will give you output like this if you wave at the camera:

```
XXXXX XX
XXXXXXX XXXXX
XXXXXXX XXXXXX
XXXXXXX XXXXX
XXXXX XXXXX
XXXXXX XXXXX
XXXXXXXXXXXXXXX XXXX
XXXXXXXXXXXXXXXXXXXXX XXXXX
XXXXXXXXXXXXXXXXXXXXXXXXXXXXX
```

To access this data in a WebGL shader, we need to get it into a texture using gl.texImage2D. When you pass an array to gl.texImage2D, you tell it which format to interpret the array as. One possible format is gl.ALPHA, which has one byte per pixel, the same layout BodyPix gives us. When a shader samples the texture, this byte is interpreted as the alpha channel, normalized from 0–255 to the range 0.0–1.0.
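The texImage2D call is not quite the whole story in WebGL 1. A 640x480 texture is not a power of two, and WebGL 1 treats such a texture as incomplete (sampling returns (0, 0, 0, 1)) unless its wrap mode is CLAMP_TO_EDGE and its minification filter does not use mipmaps; the defaults (REPEAT and NEAREST_MIPMAP_LINEAR) fail both. Separately, gl.UNPACK_ALIGNMENT defaults to 4, which assumes padded rows; a tightly packed one-byte-per-pixel array only matches that when the width is a multiple of 4. Here is a minimal sketch of the setup, assuming a WebGL 1 context; createMaskTexture and uploadMask are hypothetical helper names, not BodyPix or WebGL APIs.

```javascript
// Hypothetical helper: create a texture suitable for a BodyPix mask (WebGL 1).
function createMaskTexture(gl) {
  const texture = gl.createTexture();
  gl.bindTexture(gl.TEXTURE_2D, texture);
  // Non-power-of-two textures must clamp and must not use a mipmap filter,
  // otherwise WebGL 1 considers them incomplete.
  gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_WRAP_S, gl.CLAMP_TO_EDGE);
  gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_WRAP_T, gl.CLAMP_TO_EDGE);
  // NEAREST keeps the mask's hard 0/1 edges; LINEAR would also be valid here.
  gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_MIN_FILTER, gl.NEAREST);
  gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_MAG_FILTER, gl.NEAREST);
  return texture;
}

// Hypothetical helper: upload one segmentation result into the texture.
function uploadMask(gl, texture, segmentation) {
  gl.bindTexture(gl.TEXTURE_2D, texture);
  // BodyPix rows are tightly packed (no padding), so read them 1-byte aligned.
  gl.pixelStorei(gl.UNPACK_ALIGNMENT, 1);
  gl.texImage2D(
    gl.TEXTURE_2D, 0, gl.ALPHA,
    segmentation.width, segmentation.height, 0,
    gl.ALPHA, gl.UNSIGNED_BYTE, segmentation.data
  );
}
```

In a page, gl would come from something like canvas.getContext("webgl"); the idea is to call createMaskTexture once at startup and uploadMask after each segmentPerson result, rather than allocating a new texture per frame.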

Here’s how to load the segmentation data into a texture:

```js
gl.texImage2D(
  gl.TEXTURE_2D,       // target
  0,                   // level
  gl.ALPHA,            // internalformat
  segmentation.width,  // width
  segmentation.height, // height
  0,                   // border, "Must be 0"
  gl.ALPHA,            // format, "must be the same as internalformat"
  gl.UNSIGNED_BYTE,    // type of data below
  segmentation.data    // pixels
);
```

Unfortunately, the byte values given by BodyPix are 0 and 1, rather than the ideal 0 and 255, so a person pixel samples as an alpha of only 1/255. But we can correct for this in our fragment shader:

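```glsl
precision mediump float;

uniform sampler2D frame; // the webcam frame
uniform sampler2D mask;  // the BodyPix segmentation, uploaded as gl.ALPHA
uniform float texWidth;
uniform float texHeight;

void main(void) {
  // The first row of uploaded data (the top of the image) sits at texture y = 0,
  // but gl_FragCoord.y = 0 is the bottom of the canvas, so flip y.
  vec2 texCoord = vec2(gl_FragCoord.x/texWidth, 1.0 - (gl_FragCoord.y/texHeight));
  // The mask samples as 0.0 or 1.0/255.0; multiply by 255 to get 0.0 or 1.0.
  // Note: this writes non-premultiplied color. With the default context
  // attribute premultipliedAlpha: true, rgb should be multiplied by alpha too.
  gl_FragColor = vec4(texture2D(frame, texCoord).rgb, texture2D(mask, texCoord).a * 255.);
}
```

Here’s what I get when I run the demo against my own webcam feed: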

As you can see, BodyPix still has a number of quality issues. In priority order:

- BodyPix doesn’t realize my body extends beyond the bottom of the image. It might be possible to improve this by fudging the input or output.

- It’s really bad at recognizing fingers. It might be possible to improve this by running Handpose on the detected palms.

This page copyright James Fisher 2020. Content is not associated with my employer.
